Unless you’ve been living under a rock—an impossibly cozy, privileged, particle-filtering rock—2020 has probably opened your eyes to how far we still have to go to achieve a truly fair society. COVID-19 has revealed and reinforced existing oppression, inequality, and vulnerability, from systemic racism and a lack of affordable healthcare to a spike in domestic violence.

And if, like me, you’ve been lucky enough to keep working comfortably from home in a non-essential job, safe in the knowledge that if you got sick your hospital bill would be covered and you’d never be denied treatment because of the color of your skin, this year has probably also made you painfully aware of how you’ve been part of the problem.

So the bare minimum we can do right now is to get things right in our own fields of work, using whatever small slices of expertise and influence we have as responsibly as we possibly can.

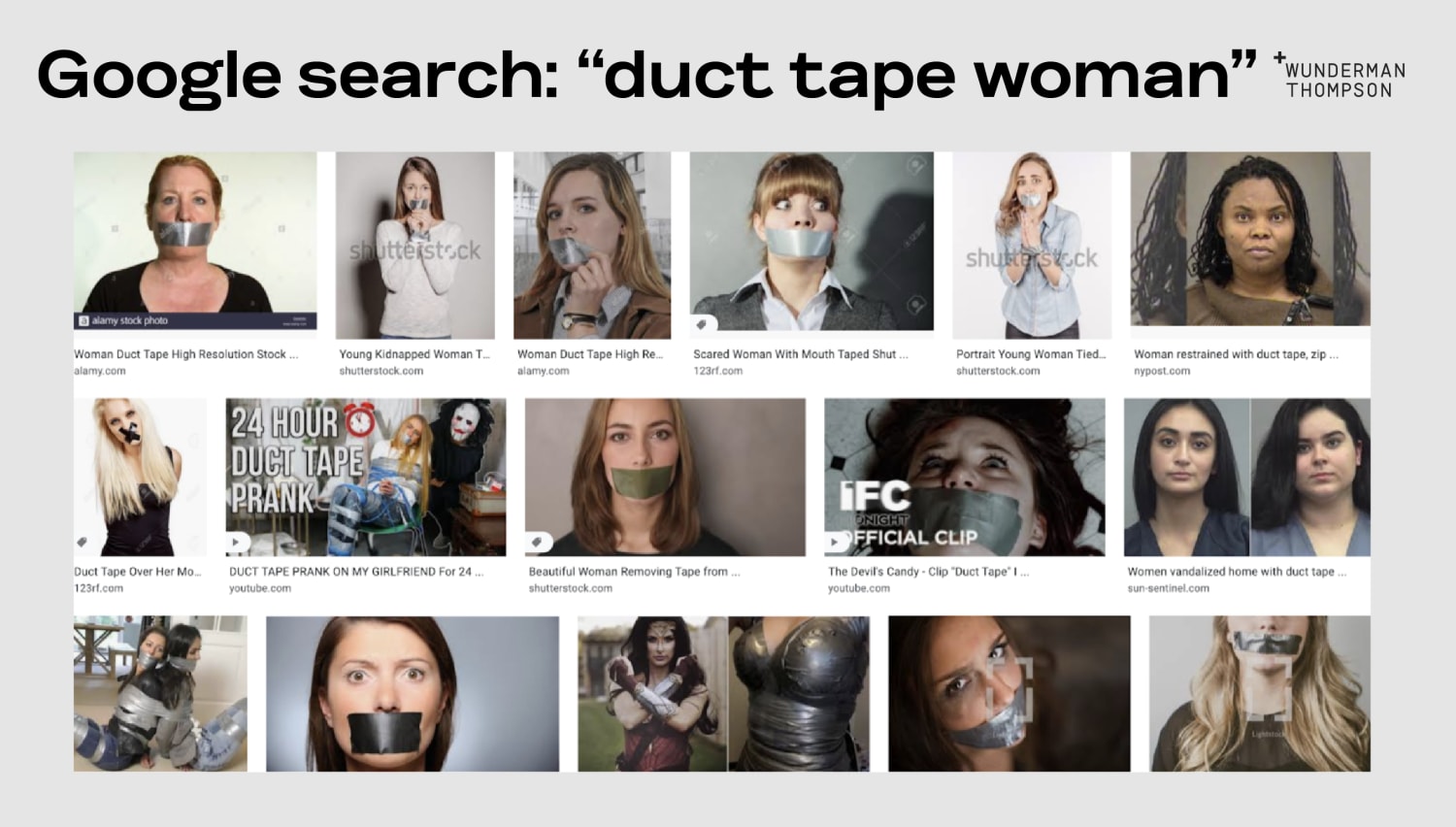

In the field of data, one way to do this is to dig into and understand the dark side of AI. We have to make sure the data our models are trained on isn’t bad, biased, or both; otherwise those models end up making harmful decisions and judgments on our behalf. Predictive policing is one particularly topical example.

Even for a data scientist like me, who spends big chunks of her time scrubbing and prepping datasets, the idea of potentially harmful AI bias can feel a little abstract: like something that happens to other people’s models and accidentally gets embedded into other people’s data products.

That was the case for me—until a few weeks ago.